2026

Who You Explain To Matters: Learning by Explaining to Conversational Agents with Different Pedagogical Roles

Zhengtao Xu, Junti Zhang, Anthony Tang, Yi-Chieh Lee

ACM CHI conference on Human Factors in Computing Systems (CHI 26) 2026

Conversational agents are increasingly used in education for learning support. An application is "learning by explaining", where learners explain their understanding to an agent. However, existing research focuses on single roles, leaving it unclear how different pedagogical roles influence learners' interaction patterns, learning outcomes and experiences. We conducted a between-subjects study (N=96) comparing agents with three pedagogical roles (Tutee, Peer, Challenger) and a control condition while learning an economics concept. We found that different pedagogical roles shaped learning dynamics, including interaction patterns and experiences. Specifically, the Tutee agent elicited the most cognitive investment but led to high pressure. The Peer agent fostered high absorption and interest through collaborative dialogue. The Challenger agent promoted cognitive and metacognitive acts, enhancing critical thinking with moderate pressure. The findings highlight how agent roles shape different learning dynamics, guiding the design of educational agents tailored to specific pedagogical goals and learning phases.

2025

Confronting Verbalized Uncertainty: Understanding How LLM’s Verbalized Uncertainty Influences Users in AI-Assisted Decision-Making

Zhengtao Xu, Tianqi Song, Yi-Chieh Lee

International Journal of Human-Computer Studies (IJHCS) 2025

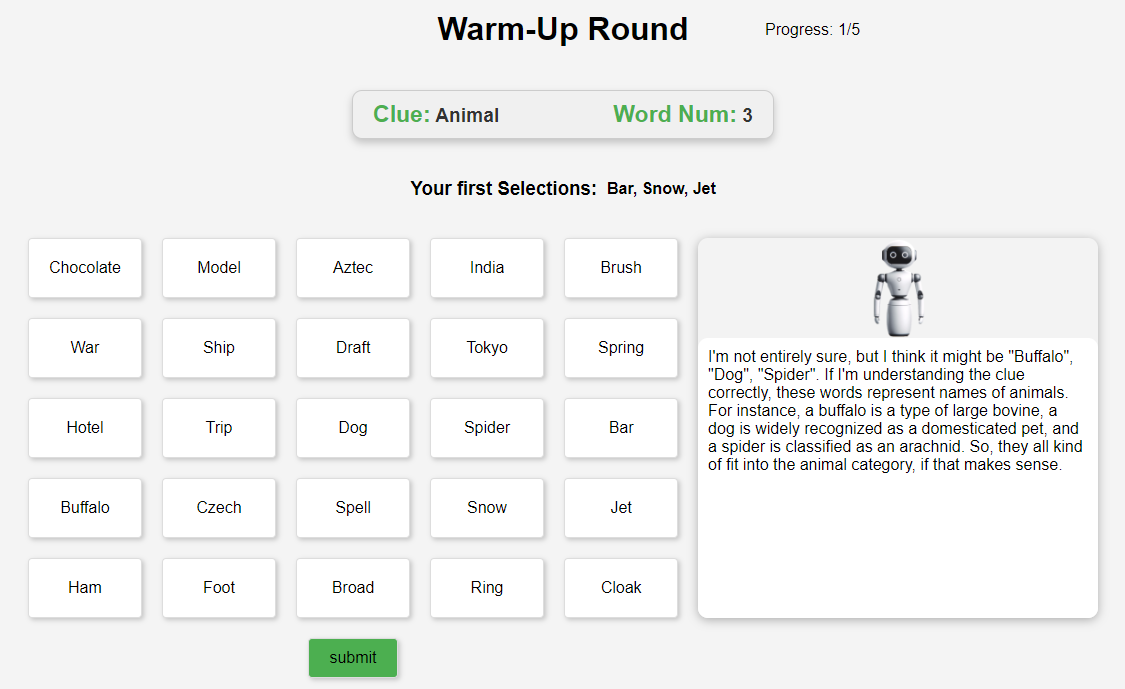

Due to their human-like nature, large language models (LLMs) often express uncertainty in their outputs. This expression, known as "verbalized uncertainty", can appear in phrases such as "I'm sure that [...]" or "It could be [...]". However, few studies have explored how this expression impacts human users' feelings towards AI, including their trust, satisfaction and task performance. Our research aims to fill this gap by exploring how different levels of verbalized uncertainty from the LLM's outputs affect users' perceptions and behaviors in AI-assisted decision-making scenarios. To this end, we conducted a between-condition study (N = 156), dividing participants into six groups based on two accuracy conditions and three conditions of verbalized uncertainty. We also used the widely played word guessing game Codenames to simulate the role of LLMs in assisting human decision-making. Our results show that medium verbalized uncertainty in the LLM's expressions consistently leads to higher user trust, satisfaction, and task performance compared to high and low verbalized uncertainty. Our results also show that participants experience verbalized uncertainty differently based on the accuracy of the LLM. This study offers important implications for the future design of LLMs, suggesting adaptive strategies to express verbalized uncertainty based on the LLM's accuracy.

2023

Multi-Label Hashing for Dependency Relations Among Multiple Objectives

Liangkang Peng, Jiangbo Qian, Zhengtao Xu, Yu Xin, Lijun Guo

IEEE Transactions on Image Processing (TIP) 2023
